Summary: A newly developed machine learning model can predict the words a person is about to speak based on their neural activity recorded by a minimally invasive neuroprosthetic device.

Source: HSE

Researchers from HSE University and the Moscow State University of Medicine and Dentistry have developed a machine learning model that can predict the word about to be uttered by a subject based on their neural activity recorded with a small set of minimally invasive electrodes.

The paper ‘Speech decoding from a small set of spatially segregated minimally invasive intracranial EEG electrodes with a compact and interpretable neural network’ has been published in the Journal of Neural Engineering. The research was financed by a grant from the Russian Government as part of the ‘Science and Universities’ National Project.

Millions of people worldwide are affected by speech disorders limiting their ability to communicate. Causes of speech loss can vary and include stroke and certain congenital conditions.

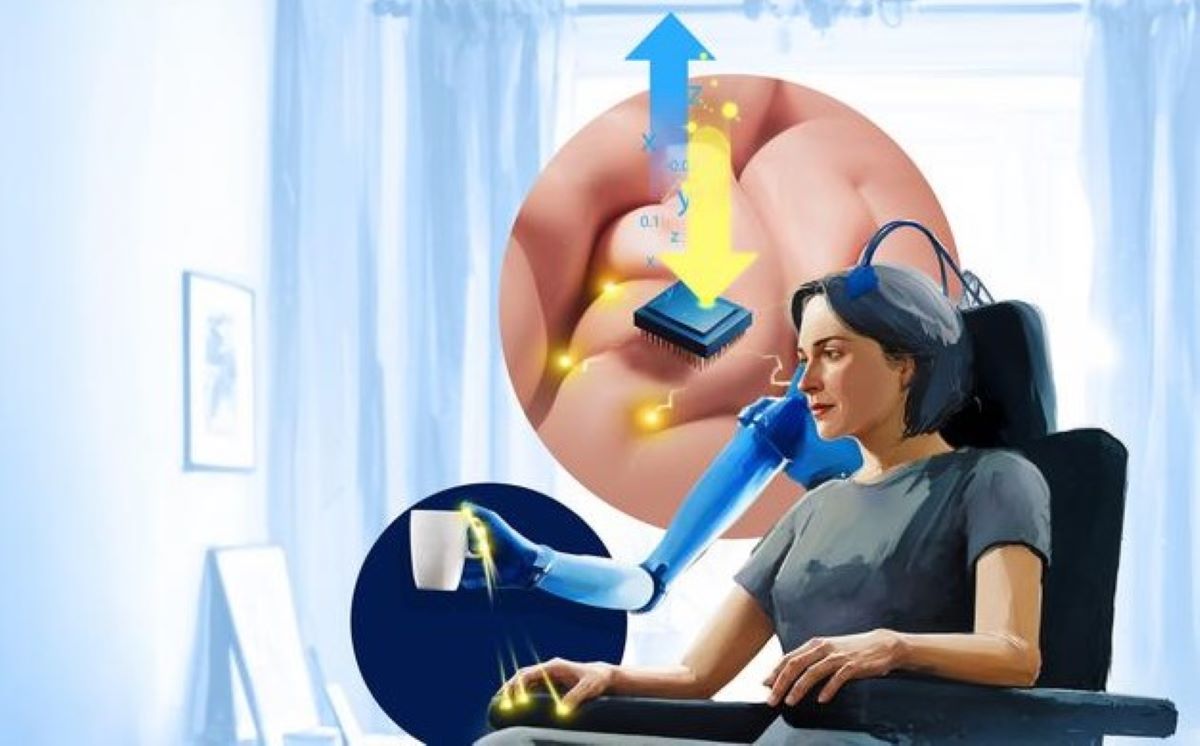

Technology is available today to restore such patients’ communication function, including ‘silent speech’ interfaces which recognise speech by tracking the movement of articulatory muscles as the person mouths words without making a sound. However, such devices help some patients but not others, such as people with facial muscle paralysis.

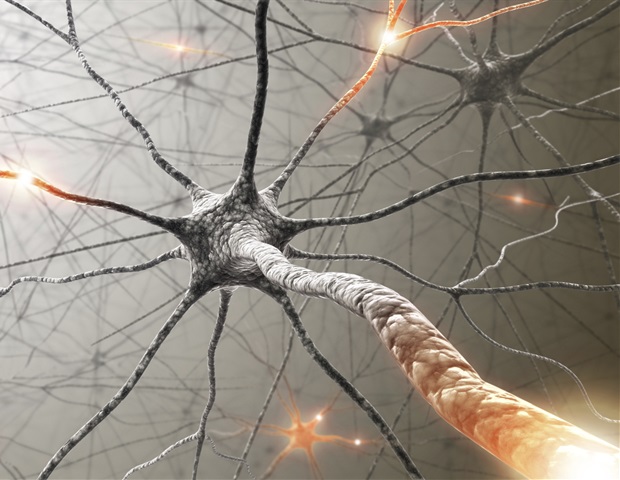

Speech neuroprostheses (brain-computer interfaces capable of decoding speech based on brain activity) can provide an accessible and reliable solution for restoring communication to such patients.

Unlike personal computers, devices with a brain-computer interface (BCI) are controlled directly by the brain without the need for a keyboard or a microphone.

A major barrier to wider use of BCIs in speech prosthetics is that this technology requires highly invasive surgery to implant electrodes in the brain tissue.

The most accurate speech recognition is achieved by neuroprostheses with electrodes covering a large area of the cortical surface. However, these solutions for reading brain activity are not intended for long-term use and present significant risks to the patients.

Researchers of the HSE Centre for Bioelectric Interfaces and the Moscow State University of Medicine and Dentistry have studied the possibility of creating a functioning neuroprosthesis capable of decoding speech with acceptable accuracy by reading brain activity from a small set of electrodes implanted in a limited cortical area.

The authors suggest that in the future, this minimally invasive procedure could even be performed under local anaesthesia. In the present study, the researchers collected data from two patients with epilepsy who had already been implanted with intracranial electrodes for the purpose of presurgical mapping to localise seizure onset zones.

The first patient was implanted bilaterally with a total of five sEEG shafts with six contacts in each, and the second patient was implanted with nine electrocorticographic (ECoG) strips with eight contacts in each.

Unlike ECoG, electrodes for sEEG can be implanted without a full craniotomy via a drill hole in the skull. In this study, only the six contacts of a single sEEG shaft in one patient and the eight contacts of one ECoG strip in the other were used to decode neural activity.

The subjects were asked to read aloud six sentences, each presented 30 to 60 times in a randomised order. The sentences varied in structure, and the majority of words within a single sentence started with the same letter. The sentences contained a total of 26 different words. As the subjects were reading, the electrodes registered their brain activity.

This data was then aligned with the audio signals to form 27 classes, including 26 words and one silence class. The resulting training dataset (containing signals recorded in the first 40 minutes of the experiment) was fed into a machine learning model with a neural network-based architecture.
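The labelling and the time-based split described above can be illustrated roughly as follows. This is only a sketch under assumptions: the function names, the frame rate, the use of class 0 for silence, and the `(start, end, class)` interval format are hypothetical and are not taken from the paper.

```python
import numpy as np


def label_frames(n_frames, frame_rate_hz, word_intervals, silence_class=0):
    """Give every time frame a class id; frames outside any word are silence.

    word_intervals is a list of (start_s, end_s, class_id) tuples that would
    come from aligning the audio recording with the neural recording.
    """
    labels = np.full(n_frames, silence_class, dtype=int)
    for start_s, end_s, class_id in word_intervals:
        start = int(round(start_s * frame_rate_hz))
        end = int(round(end_s * frame_rate_hz))
        labels[start:end] = class_id
    return labels


def chronological_split(labels, frame_rate_hz, train_minutes=40):
    """Split by time: the first train_minutes go to training, the rest to testing."""
    split = int(round(train_minutes * 60 * frame_rate_hz))
    return labels[:split], labels[split:]


# Toy usage: 10 frames at 1 frame per second, two spoken words (classes 5 and 12).
toy_labels = label_frames(10, 1.0, [(2, 4, 5), (6, 7, 12)])
train, test = chronological_split(np.zeros(100, dtype=int), 1.0, train_minutes=1)
```

Splitting by time rather than at random keeps the test data in a separate interval from the training data, which matches the article's description of training on the first 40 minutes.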

The learning task for the neural network was to predict the next uttered word (class) based on the neural activity data preceding its utterance.
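One way to picture this constraint is a window of neural data that ends just before the word onset, so that no samples recorded during or after the utterance reach the decoder. The helper below is a hypothetical illustration; the name `preceding_window`, the window length, and the sample indices are assumptions and do not come from the study.

```python
import numpy as np


def preceding_window(signal, onset_sample, window_len):
    """Return the (channels, window_len) slice that ends just before onset_sample.

    signal has shape (channels, samples). Only samples strictly before the
    word onset are returned, so the decoder never sees later activity.
    """
    start = onset_sample - window_len
    if start < 0:
        raise ValueError("not enough data before the onset")
    return signal[:, start:onset_sample]


# Toy usage: 2 channels, 10 samples, word onset at sample 6, 3-sample window.
toy_signal = np.arange(20).reshape(2, 10)
toy_window = preceding_window(toy_signal, onset_sample=6, window_len=3)
```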

In designing the neural network’s architecture, the researchers wanted to make it simple, compact, and easily interpretable. They came up with a two-stage architecture that first extracted internal speech representations from the recorded brain activity data, producing log-mel spectral coefficients, and then predicted a specific class, i.e. a word or silence.
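To make the two-stage idea concrete, here is a rough forward-pass sketch in NumPy. It is not the authors' implementation. The abstract mentions spatial and temporal filters, and the code mirrors that loosely, but the number of filter branches, the filter length, the number of log-mel coefficients, and the envelope step are all assumptions. The weights are random and untrained, so the outputs only show the shapes involved.

```python
import numpy as np

N_CHANNELS = 6   # contacts on a single sEEG shaft (from the article)
N_CLASSES = 27   # 26 words + 1 silence class (from the article)
N_BRANCHES = 4   # assumption: number of spatial/temporal filter pairs
N_MELS = 40      # assumption: number of log-mel coefficients
KERNEL = 15      # assumption: temporal filter length in samples


def init_params(seed=0):
    """Random, untrained weights, used only to show the shapes involved."""
    rng = np.random.default_rng(seed)
    return {
        "spatial": 0.1 * rng.standard_normal((N_BRANCHES, N_CHANNELS)),
        "temporal": 0.1 * rng.standard_normal((N_BRANCHES, KERNEL)),
        "to_mel": 0.1 * rng.standard_normal((N_MELS, N_BRANCHES)),
        "to_class": 0.1 * rng.standard_normal((N_CLASSES, N_MELS)),
    }


def forward(params, window):
    """Map a (N_CHANNELS, T) window to (log-mel estimate, class probabilities)."""
    # Stage 1a: each spatial filter mixes the channels into one virtual signal.
    virtual = params["spatial"] @ window
    # Stage 1b: each virtual signal is passed through its own temporal filter.
    filtered = np.stack([
        np.convolve(v, k, mode="valid")
        for v, k in zip(virtual, params["temporal"])
    ])
    # Stage 1c: rectify and average over time to get one envelope value per branch,
    # then map the envelopes to an estimate of the log-mel coefficients.
    envelope = np.abs(filtered).mean(axis=1)
    log_mel = params["to_mel"] @ envelope
    # Stage 2: classify the log-mel estimate into a word or silence (softmax).
    logits = params["to_class"] @ log_mel
    shifted = logits - logits.max()
    probs = np.exp(shifted) / np.exp(shifted).sum()
    return log_mel, probs


# Toy usage on random data standing in for a 200-sample neural window.
toy_input = np.random.default_rng(1).standard_normal((N_CHANNELS, 200))
log_mel, probs = forward(init_params(), toy_input)
```

Keeping the first stage to a handful of spatial and temporal filters is what would make such a model inspectable: each filter's weights can be examined directly.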

Thus trained, the neural network achieved 55% accuracy using only six channels of data recorded by a single sEEG electrode in the first patient and 70% accuracy using only eight channels of data recorded by a single ECoG strip in the second patient. Such accuracy is comparable to that demonstrated in other studies using devices that required electrodes to be implanted over the entire cortical surface.

The resulting interpretable model makes it possible to explain in neurophysiological terms which neural information contributes most to predicting a word about to be uttered.

The researchers examined signals coming from different neuronal populations to determine which of them were pivotal for the downstream task.

Their findings were consistent with the speech mapping results, suggesting that the model uses neural signals which are pivotal and can therefore be used to decode imaginary speech.

Another advantage of this solution is that it does not require manual feature engineering. The model has learned to extract speech representations directly from the brain activity data.

The interpretability of results also indicates that the network decodes signals from the brain rather than from any concomitant activity, such as electrical signals from the articulatory muscles or arising due to a microphone effect.

The researchers emphasise that the prediction was always based on the neural activity data preceding the utterance. This, they argue, ensures that the decision rule did not use the auditory cortex’s response to speech already uttered.

“The use of such interfaces involves minimal risks for the patient. If everything works out, it could be possible to decode imaginary speech from neural activity recorded by a small number of minimally invasive electrodes implanted in an outpatient setting with local anaesthesia”, says Alexey Ossadtchi, leading author of the study, director of the Centre for Bioelectric Interfaces of the HSE Institute for Cognitive Neuroscience.

About this neurotech research news

Author: Ksenia Bregadze

Source: HSE

Contact: Ksenia Bregadze – HSE

Image: The image is in the public domain

Original Research: Closed access.

“Speech decoding from a small set of spatially segregated minimally invasive intracranial EEG electrodes with a compact and interpretable neural network” by Alexey Ossadtchi et al. Journal of Neural Engineering

Abstract

Speech decoding from a small set of spatially segregated minimally invasive intracranial EEG electrodes with a compact and interpretable neural network

Objective. Speech decoding, one of the most intriguing brain-computer interface applications, opens up plentiful opportunities from rehabilitation of patients to direct and seamless communication between human species. Conventional solutions rely on invasive recordings with a large number of distributed electrodes implanted through craniotomy. Here we explored the possibility of creating speech prosthesis in a minimally invasive setting with a small number of spatially segregated intracranial electrodes.

Approach. We collected one hour of data (from two sessions) in two patients implanted with invasive electrodes. We then used only the contacts that pertained to a single stereotactic electroencephalographic (sEEG) shaft or an electrocorticographic (ECoG) stripe to decode neural activity into 26 words and one silence class. We employed a compact convolutional network-based architecture whose spatial and temporal filter weights allow for a physiologically plausible interpretation.

Main results. We achieved on average 55% accuracy using only six channels of data recorded with a single minimally invasive sEEG electrode in the first patient and 70% accuracy using only eight channels of data recorded for a single ECoG strip in the second patient in classifying 26+1 overtly pronounced words. Our compact architecture did not require the use of pre-engineered features, learned fast and resulted in a stable, interpretable and physiologically meaningful decision rule successfully operating over a contiguous dataset collected during a different time interval than that used for training. Spatial characteristics of the pivotal neuronal populations corroborate with active and passive speech mapping results and exhibit the inverse space-frequency relationship characteristic of neural activity. Compared to other architectures our compact solution performed on par or better than those recently featured in neural speech decoding literature.

Significance. We showcase the possibility of building a speech prosthesis with a small number of electrodes and based on a compact feature engineering free decoder derived from a small amount of training data.