Summary: A new study comparing stroke survivors with healthy adults suggests that post-stroke language disorders stem not from slower hearing but from weaker integration of speech sounds. Although patients detected sounds as quickly as controls, their brains encoded speech features much less strongly, especially when the words were unclear.

Healthy listeners extended processing during uncertainty, but stroke survivors did not, suggesting that they may stop analyzing sounds too early to fully understand difficult words. The findings highlight neural patterns essential for verbal comprehension and point to faster, story-based diagnostic tools for language disorders.

Key facts

Weakened integration: Stroke survivors process features of speech sounds with much lower neural strength despite normal sound detection speed.

Reduced persistence: When words are unclear, they do not sustain processing long enough to resolve the ambiguity.

Diagnostic potential: Simple story-listening tasks could replace lengthy behavioral tests to detect language disorders.

Source: SfN

After a stroke, some people experience a language disorder that hinders their ability to process speech sounds. How do their brains change after a stroke?

Researchers led by Laura Gwilliams, a faculty scholar at Stanford’s Wu Tsai Neurosciences Institute and Stanford Data Science and an assistant professor in Stanford’s School of Humanities and Sciences, and Maaike Vandermosten, an associate professor in the Department of Neurosciences at KU Leuven, compared the brains of 39 post-stroke patients and 24 age-matched healthy controls to reveal brain mechanisms of language processing.

As reported in their Journal of Neuroscience article, the researchers recorded brain activity while the volunteers listened to a story.

People with speech processing problems following a stroke were not slower to process speech sounds, but their processing was much weaker than that of healthy participants.

According to the researchers, this suggests that people with this language disorder can hear sounds of all types as well as healthy people, but have problems integrating speech sounds to understand language.

Additionally, when there was uncertainty about what words were being said, healthy people processed the features of speech sounds for longer than those who had had a stroke.

This could mean that after a stroke, people do not process speech sounds long enough to successfully understand words that are difficult to detect.

According to the authors, this work points out patterns of brain activity that may be crucial for understanding verbal language.

First author Jill Kries expresses her enthusiasm for continuing to explore how this simple approach (listening to a story) can be used to improve the diagnosis of conditions characterized by language processing problems, which currently involves hours of behavioral tasks.

Key questions answered:

A: Their brains detect sounds normally but integrate speech features with reduced strength, making understanding difficult even when hearing is intact.

A: Healthy listeners process sound characteristics longer to resolve ambiguity, but stroke survivors stop too soon, leading to a loss of meaning.

A: Brain recordings of story listening can provide a quick, naturalistic alternative to hours of behavioral language testing.

Editorial notes:

This article was edited by a Neuroscience News editor. Journal paper reviewed in full. Additional context added by our staff.

About this stroke and speech processing research news

Author: SfN Media

Source: SfN

Contact: SfN Media – SfN

Image: Image is credited to Neuroscience News.

Original Research: Closed access.

“The spatiotemporal dynamics of phoneme coding in aging and aphasia” by Laura Gwilliams et al. Journal of Neuroscience

Abstract

The spatiotemporal dynamics of phoneme coding in aging and aphasia.

During successful linguistic comprehension, speech sounds (phonemes) are encoded within a series of neural patterns that evolve over time.

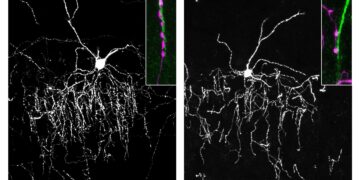

Here we test whether these neural dynamics of speech encoding are altered in people with a language disorder. We recorded EEG responses from the brains of 39 individuals with post-stroke aphasia (13♀/26♂) and 24 age-matched healthy controls (i.e., older adults; 8♀/16♂) during 25 min of listening to natural stories.

We estimated the duration of phonetic feature encoding, the speed of evolution across neural populations, and the spatial location of encoding across EEG sensors.

First, we establish that phonetic features are strongly encoded in the EEG responses of healthy older adults.

Second, when comparing individuals with aphasia to healthy controls, we found significantly less phonetic encoding in the aphasic group after the initial shared processing pattern (0.08–0.25 s after phoneme onset).

Phonetic features were less strongly encoded over left-lateralized electrodes in the aphasia group compared to controls, with no differences in the speed of neural pattern evolution.

Finally, we observed that healthy controls, but not individuals with aphasia, encode phonetic features longer when uncertainty about word identity is high, indicating that this mechanism (encoding phonetic information until word identity is resolved) is crucial for successful comprehension.

Taken together, our results suggest that aphasia may involve a failure to maintain lower-order information long enough to recognize lexical items.
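
To make the idea of "encoding duration" concrete, the sketch below shows one simple way such a quantity could be read off a time-resolved decoding curve: the length of the longest unbroken stretch in which a decoding score stays above a threshold. This is an illustration only, not the authors' analysis pipeline. The function name, the threshold, and all score values are invented for the example and do not come from the study.

```python
def encoding_duration(times_ms, scores, threshold):
    """Length (in ms) of the longest contiguous run with score > threshold.

    A run is measured from its first to its last above-threshold sample,
    so a run of a single sample has length 0.
    """
    best = 0
    start = None  # time of the first sample in the current run
    for t, s in zip(times_ms, scores):
        if s > threshold:
            if start is None:
                start = t
            best = max(best, t - start)
        else:
            start = None  # run broken, reset
    return best


# Hypothetical decoding scores sampled every 50 ms after phoneme onset.
# These numbers are made up purely to illustrate the computation.
TIMES_MS = list(range(0, 450, 50))  # 0, 50, ..., 400
CONTROL_SCORES = [0.50, 0.52, 0.60, 0.62, 0.61, 0.58, 0.56, 0.51, 0.50]
APHASIA_SCORES = [0.50, 0.52, 0.57, 0.56, 0.51, 0.50, 0.50, 0.50, 0.50]
THRESHOLD = 0.55  # assumed cutoff for "feature is decodable"

control_ms = encoding_duration(TIMES_MS, CONTROL_SCORES, THRESHOLD)
aphasia_ms = encoding_duration(TIMES_MS, APHASIA_SCORES, THRESHOLD)
print(control_ms, aphasia_ms)
```

With these invented curves, the control-like trace stays above threshold for a longer window than the aphasia-like trace, which mirrors the kind of contrast the abstract describes. A real analysis would derive the scores from EEG data and use a statistically justified threshold rather than a fixed cutoff.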