Summary: Scientists have, for the first time, decoded inner speech (silently imagined words) on command using brain-computer interface technology, reaching up to 74% accuracy. By recording the neural activity of participants with severe paralysis, the team discovered that inner speech and attempted speech share overlapping patterns of brain activity, although inner-speech signals are weaker.

Artificial intelligence models trained on these patterns could interpret imagined words from a large vocabulary, and a password-based system ensured that decoding happened only when desired. This advance could pave the way for faster, more natural communication for people who cannot speak, with the potential for greater accuracy as the technology improves.

Key facts

Decoding advance: Inner speech was decoded on command from brain activity with up to 74% accuracy.

Overlapping brain patterns: Attempted speech and imagined speech activate overlapping regions of the motor cortex.

Source: Cell Press

Scientists have identified brain activity related to inner speech, the silent monologue in people’s heads, and successfully decoded it with up to 74% accuracy.

Publishing on August 14 in the Cell Press journal Cell, their findings could help people who are unable to speak audibly communicate more easily using brain-computer interface (BCI) technologies that begin translating inner thoughts only when a participant thinks of a password.

“This is the first time we’ve managed to understand what brain activity looks like when you just think about speaking,” says lead author Erin Kunz of Stanford University. “For people with severe speech and motor impairments, BCIs capable of decoding inner speech could help them communicate much more easily and more naturally.”

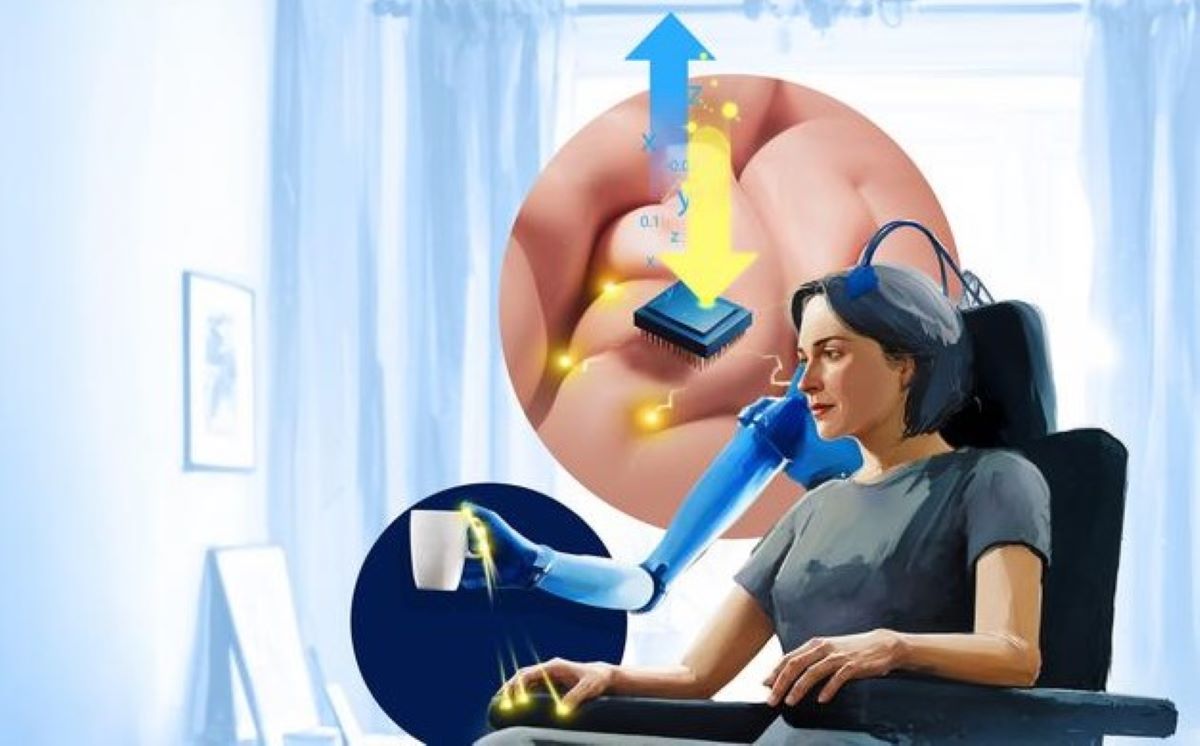

BCIs have recently emerged as a tool to help people with disabilities. Using sensors implanted in brain regions that control movement, BCI systems can decode movement-related neural signals and translate them into actions, such as moving a prosthetic hand.

Research has shown that BCIs can even decode attempted speech among people with paralysis. When users physically attempt to speak out loud by engaging the muscles involved in making sounds, BCIs can interpret the resulting brain activity and type out what they are trying to say, even if the speech itself is unintelligible.

Although BCI-assisted communication is much faster than older technologies, including systems that track users’ eye movements to type out words, attempting to speak can still be tiring and slow for people with limited muscle control.

The team wondered whether BCIs could decode inner speech instead.

“If you just have to think about speech instead of actually trying to speak, it’s potentially easier and faster for people,” says Benyamin Meschede-Krasa, co-first author of the paper, from Stanford University.

The team recorded neural activity from microelectrodes implanted in the motor cortex, a brain region responsible for speaking, in four participants with severe paralysis caused by either amyotrophic lateral sclerosis (ALS) or a brainstem stroke. The researchers asked the participants either to attempt to speak or to imagine saying a set of words.

They found that attempted speech and inner speech activate overlapping regions of the brain and evoke similar patterns of neural activity, but inner speech tends to show a weaker magnitude of activation overall.

Using the inner speech data, the team trained artificial intelligence models to interpret imagined words. In a proof-of-concept demonstration, the BCI could decode imagined sentences from a vocabulary of up to 125,000 words with an accuracy rate as high as 74%.

The BCI was also able to pick up inner speech that some participants were never instructed to produce, such as numbers when they were asked to count pink circles on the screen.

The team also found that while attempted speech and inner speech produce similar patterns of neural activity in the motor cortex, they were different enough to be reliably distinguished from each other. Senior author Frank Willett of Stanford University says researchers can use this distinction to train BCIs to ignore inner speech altogether.
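To make the idea of “different enough to be reliably distinguished” concrete, here is a minimal sketch of one generic way such a separation could work: project each trial’s neural activity onto the axis that points from the average inner-speech pattern toward the average attempted-speech pattern, and threshold it. This is an illustrative assumption, not the study’s method; the function names, the three-channel firing rates, and the midpoint threshold are all hypothetical.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def mean_vector(trials):
    """Element-wise mean across trials (each trial is one vector of channel rates)."""
    return [sum(channel) / len(trials) for channel in zip(*trials)]


def fit_intent_axis(attempted, inner):
    """Fit a hypothetical 'motor intent' axis as the normalized difference of condition means.

    Returns the unit axis and a threshold halfway between the two projected means.
    """
    mu_attempted = mean_vector(attempted)
    mu_inner = mean_vector(inner)
    diff = [a - b for a, b in zip(mu_attempted, mu_inner)]
    norm = math.sqrt(dot(diff, diff))
    if norm == 0:
        raise ValueError("condition means are identical; no separating axis")
    axis = [d / norm for d in diff]
    threshold = (dot(axis, mu_attempted) + dot(axis, mu_inner)) / 2
    return axis, threshold


def is_attempted_speech(trial, axis, threshold):
    """Classify a trial as attempted speech if its projection exceeds the threshold."""
    return dot(axis, trial) > threshold


# Made-up firing rates for 3 channels: inner speech roughly follows the
# attempted-speech pattern but at a lower magnitude.
attempted = [[2.0, 1.0, 0.5], [2.2, 1.1, 0.4]]
inner = [[1.0, 0.6, 0.5], [1.1, 0.5, 0.6]]
axis, threshold = fit_intent_axis(attempted, inner)

print(is_attempted_speech([1.9, 1.0, 0.5], axis, threshold))   # True
print(is_attempted_speech([1.0, 0.55, 0.5], axis, threshold))  # False
```

A BCI meant to ignore inner speech could, under this toy assumption, pass a trial to its speech decoder only when `is_attempted_speech` returns `True`.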

For users who may want to use inner speech as a method for faster or easier communication, the team also demonstrated a password-controlled mechanism that prevents the BCI from decoding inner speech unless it is temporarily unlocked with a chosen keyword. In their experiment, users could think of the phrase “Chitty Chitty Bang Bang” to begin inner-speech decoding. The system recognized the password with more than 98% accuracy.
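The locking behavior described above can be sketched as a small state machine. This is a hypothetical illustration only: it assumes the decoder already emits a text phrase with a confidence score, and the `PasswordGate` class, its `min_confidence` threshold, and the exact-match rule are inventions for this example. The article does not say how the study’s system detected the password.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PasswordGate:
    """Hypothetical gate that withholds decoded inner speech until a password is detected."""

    password: str = "chitty chitty bang bang"
    min_confidence: float = 0.9  # hypothetical acceptance threshold, not from the study
    unlocked: bool = False
    transcript: List[str] = field(default_factory=list)

    def process(self, phrase: str, confidence: float) -> Optional[str]:
        """Return the phrase if the gate is unlocked; otherwise discard it."""
        normalized = " ".join(phrase.lower().split())
        if not self.unlocked:
            # While locked, nothing is output; only a confident password match unlocks.
            if normalized == self.password and confidence >= self.min_confidence:
                self.unlocked = True
            return None
        self.transcript.append(phrase)
        return phrase

    def lock(self) -> None:
        """Return to the locked state, e.g. when the user ends a session."""
        self.unlocked = False


gate = PasswordGate()
outputs = [
    gate.process("private thought", 0.95),          # locked: discarded
    gate.process("Chitty Chitty Bang Bang", 0.97),  # password: unlocks, not output
    gate.process("hello there", 0.80),              # unlocked: passed through
]
print(outputs)  # [None, None, 'hello there']
```

The design choice worth noting is that the locked state is the default: private thoughts decoded before the password are dropped rather than buffered.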

While current BCI systems are unable to decode free-form inner speech without making substantial errors, the researchers say that more advanced devices with more sensors and better algorithms may be able to do so in the future.

“The future of BCIs is bright,” says Willett. “This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech.”

Funding:

This work was supported by the Assistant Secretary of Defense for Health Affairs, the National Institutes of Health, the Simons Collaboration on the Global Brain, the A.P. Giannini Foundation, the Department of Veterans Affairs, the Wu Tsai Neurosciences Institute, the Howard Hughes Medical Institute, the Larry and Pamela Garlick Foundation, the National Institute on Deafness and Other Communication Disorders, the National Institute of Neurological Disorders and Stroke, the Eunice Kennedy Shriver National Institute of Child Health and Human Development, the Blavatnik Family Foundation, and the National Science Foundation.

About this neurotech and AI research news

Author: Julia Grimmett

Source: Cell Press

Contact: Julia Grimmett – Cell Press

Image: The image is credited to Neuroscience News

Original research: open access.

“Inner speech in motor cortex and implications for speech neuroprostheses” by Erin Kunz et al. Cell

Abstract

Inner speech in motor cortex and implications for speech neuroprostheses

Speech brain-computer interfaces (BCIs) show promise in restoring communication to people with paralysis but have also prompted discussions regarding their potential to decode private inner speech.

Separately, inner speech may be a way to bypass the current approach of requiring speech BCI users to physically attempt speech, which is fatiguing and can slow communication.

Using multi-unit recordings from four participants, we found that inner speech is robustly represented in the motor cortex and that imagined sentences can be decoded in real time.

The representation of inner speech was highly correlated with that of attempted speech, though we also identified a neural “motor-intent” dimension that differentiates the two.

We investigated the possibility of decoding private inner speech and found that some aspects of free-form inner speech could be decoded during sequence recall and counting tasks.

Finally, we demonstrate high-fidelity strategies that prevent speech BCIs from unintentionally decoding private inner speech.