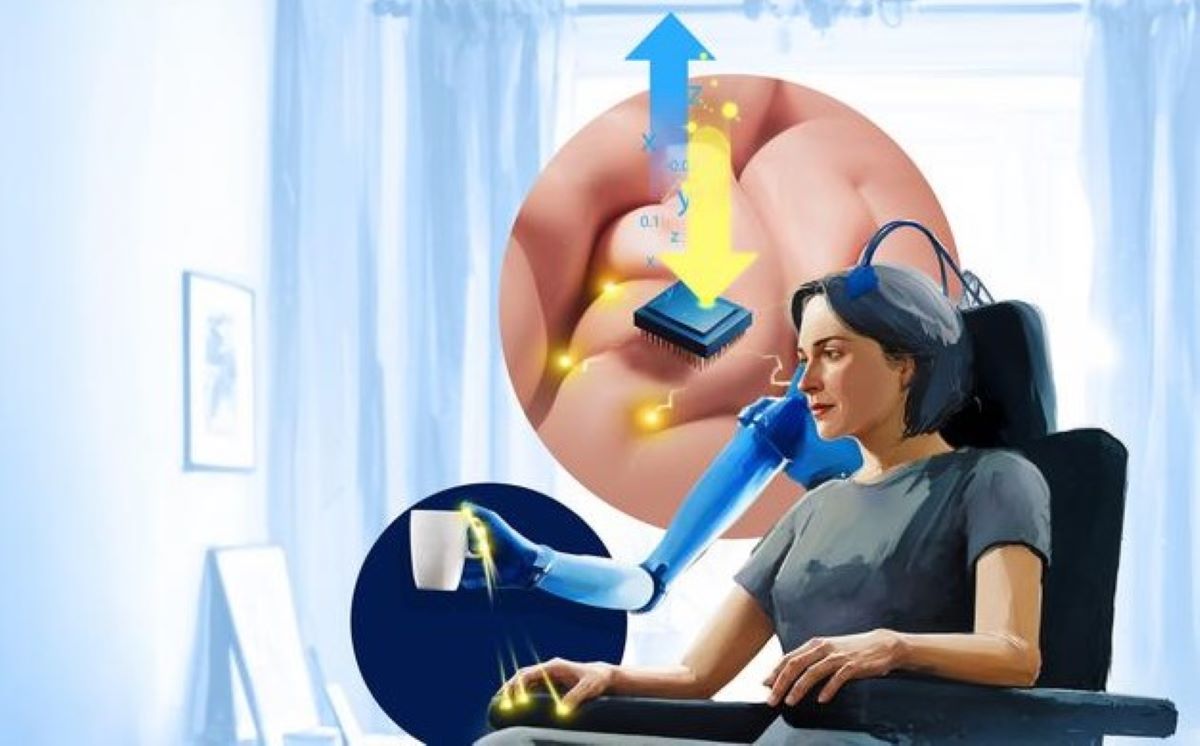

Summary: Researchers achieved a breakthrough in converting brain signals to audible speech with up to 100% accuracy. The team used brain implants and artificial intelligence to directly map brain activity to speech in patients with epilepsy.

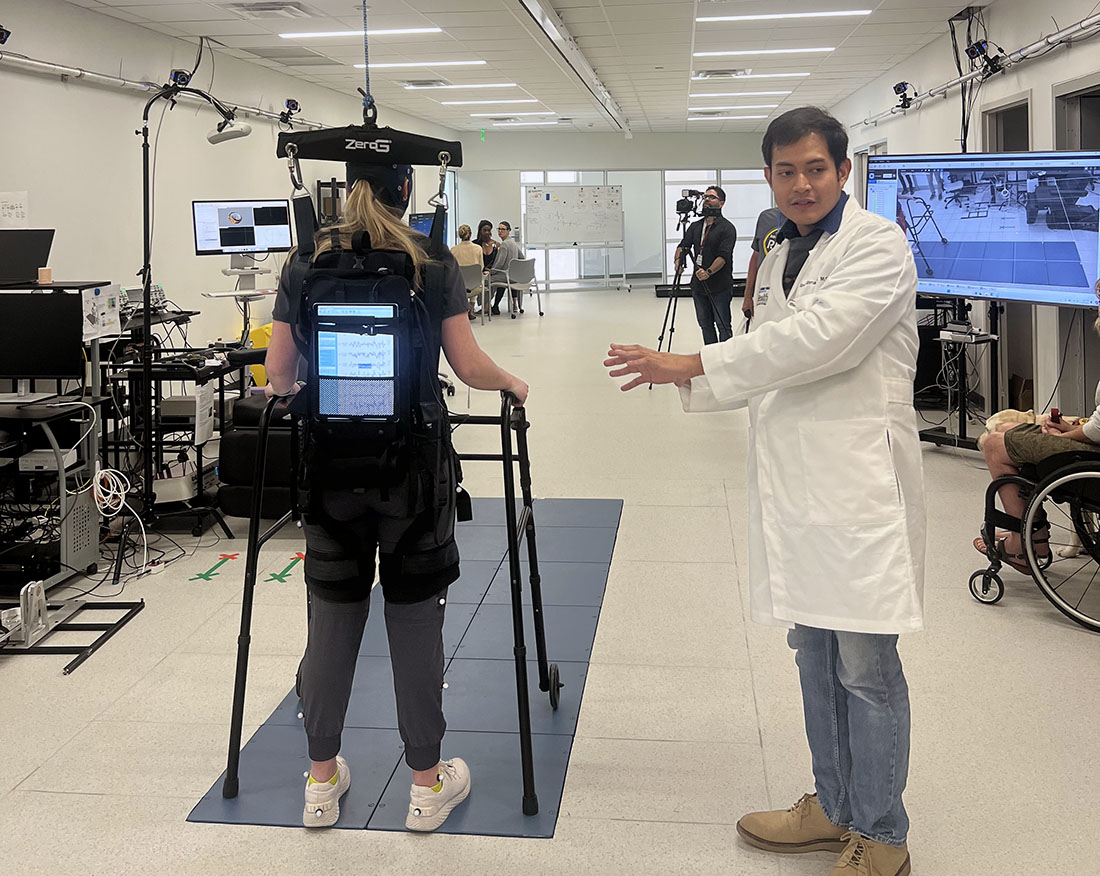

This technology aims to give a voice back to people in a locked-in state, who are paralyzed and cannot communicate. The researchers believe that the success of this project marks a significant advance in the realm of Brain-Computer Interfaces.

Key Facts:

- Using a combination of brain implants and AI, the researchers were able to predict spoken words with an accuracy of 92-100%.

- The team focused their experiments on non-paralyzed people with temporary brain implants, decoding what they said out loud based on their brain activity.

- While the technology currently focuses on individual words, future goals include the ability to predict full sentences and paragraphs based on brain activity.

Source: Radboud University

Researchers from Radboud University and the UMC Utrecht have succeeded in transforming brain signals into audible speech.

By decoding signals from the brain through a combination of implants and AI, they were able to predict the words people wanted to say with an accuracy of 92 to 100%.

Their findings are published in the Journal of Neural Engineering this month.

The research indicates a promising development in the field of Brain-Computer Interfaces, according to lead author Julia Berezutskaya, researcher at Radboud University’s Donders Institute for Brain, Cognition and Behaviour and UMC Utrecht. Berezutskaya and colleagues at the UMC Utrecht and Radboud University used brain implants in patients with epilepsy to infer what people were saying.

Bringing again voices

“Ultimately, we hope to make this technology available to patients in a locked-in state, who are paralyzed and unable to communicate,” says Berezutskaya.

“These people lose the ability to move their muscles, and thus to speak. By developing a brain-computer interface, we can analyse brain activity and give them a voice again.”

For the experiment in their new paper, the researchers asked non-paralyzed people with temporary brain implants to speak a number of words out loud while their brain activity was being measured.

Berezutskaya: “We were then able to establish direct mapping between brain activity on the one hand, and speech on the other hand. We also used advanced artificial intelligence models to translate that brain activity directly into audible speech.

“That means we weren’t just able to guess what people were saying, but we could immediately transform those words into intelligible, understandable sounds. In addition, the reconstructed speech even sounded like the original speaker in their tone of voice and manner of speaking.”

Researchers around the world are working on ways to recognize words and sentences in brain patterns.

The researchers were able to reconstruct intelligible speech with relatively small datasets, showing their models can uncover the complex mapping between brain activity and speech with limited data.

Crucially, they also conducted listening tests with volunteers to evaluate how identifiable the synthesized words were.

The positive results from those tests indicate the technology isn’t just succeeding at identifying words correctly, but also at getting those words across audibly and understandably, just like a real voice.

Limitations

“For now, there’s still a number of limitations,” warns Berezutskaya. “In these experiments, we asked participants to say twelve words out loud, and those were the words we tried to detect.

“In general, predicting individual words is less complicated than predicting entire sentences. In the future, large language models that are used in AI research could be beneficial.

“Our goal is to predict full sentences and paragraphs of what people are trying to say based on their brain activity alone. To get there, we’ll need more experiments, more advanced implants, larger datasets and advanced AI models.

“All these processes will still take a number of years, but it looks like we’re on target.”

About this AI and neurotech research news

Author: Thomas Haenen

Source: Radboud University

Contact: Thomas Haenen – Radboud University

Image: The image is credited to Neuroscience News

Original Research: Open access.

“Direct speech reconstruction from sensorimotor brain activity with optimized deep learning models” by Julia Berezutskaya et al. Journal of Neural Engineering

Abstract

Direct speech reconstruction from sensorimotor brain activity with optimized deep learning models

Development of brain-computer interface (BCI) technology is key for enabling communication in individuals who have lost the faculty of speech due to severe motor paralysis.

A BCI control strategy that is gaining attention employs speech decoding from neural data.

Recent studies have shown that a combination of direct neural recordings and advanced computational models can provide promising results.

Understanding which decoding strategies deliver best and directly applicable results is crucial for advancing the field.

In this paper, we optimized and validated a decoding approach based on speech reconstruction directly from high-density electrocorticography recordings from sensorimotor cortex during a speech production task.

We show that 1) dedicated machine learning optimization of reconstruction models is key for achieving the best reconstruction performance; 2) individual word decoding in reconstructed speech achieves 92-100% accuracy (chance level is 8%); 3) direct reconstruction from sensorimotor brain activity produces intelligible speech.

These results underline the need for model optimization in achieving best speech decoding results and highlight the potential that reconstruction-based speech decoding from sensorimotor cortex can offer for development of next-generation BCI technology for communication.
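
For readers wondering where the 8% chance level comes from: it is consistent with the twelve-word vocabulary mentioned in the article, assuming each word was equally likely to occur. The short Python sketch below works through that arithmetic. The function name `chance_level` and the equal-likelihood assumption are illustrative and are not taken from the paper.

```python
def chance_level(num_classes: int) -> float:
    """Accuracy expected from guessing uniformly at random,
    assuming every class (here, every word) is equally likely."""
    if num_classes <= 0:
        raise ValueError("num_classes must be positive")
    return 1.0 / num_classes


# Twelve words were spoken in the experiments described in the article.
NUM_WORDS = 12

chance = chance_level(NUM_WORDS)
print(f"Chance level for {NUM_WORDS} words: {chance:.1%}")
```

Under that assumption a random guess is right about one time in twelve, which rounds to the 8% quoted in the abstract and puts the reported 92-100% accuracy in context.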