Summary: A new brain-machine interface is the most accurate to date at predicting a person’s internal monologue. The technology could be used to help those with disorders affecting speech to communicate effectively.

Source: CalTech

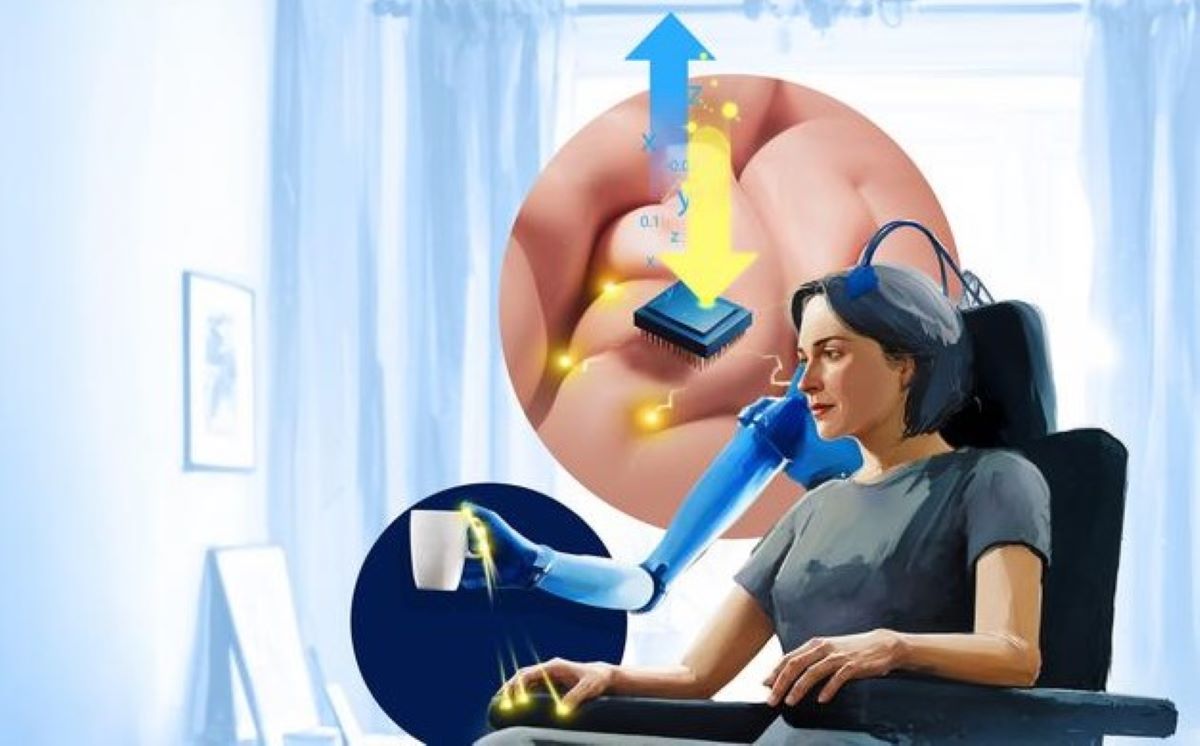

New Caltech research is showing how devices implanted into people’s brains, called brain-machine interfaces (BMIs), could one day help patients who have lost their ability to speak.

In a new study presented at the 2022 Society for Neuroscience conference in San Diego, the researchers demonstrated that they could use a BMI to accurately predict which words a tetraplegic participant was simply thinking and not speaking or miming.

“You may have already seen videos of people with tetraplegia using BMIs to control robotic arms and hands, for example to grab a bottle and to drink from it or to eat a piece of chocolate,” says Sarah Wandelt, a Caltech graduate student in the lab of Richard Andersen, James G. Boswell Professor of Neuroscience and director of the Tianqiao and Chrissy Chen Brain-Machine Interface Center at Caltech.

“These new results are promising in the areas of language and communication. We used a BMI to reconstruct speech,” says Wandelt, who presented the results at the conference on November 13.

Previous studies have had some success at predicting participants’ speech by analyzing brain signals recorded from motor areas when a participant whispered or mimed words. But predicting what somebody is thinking, their internal dialogue, is much more difficult, as it does not involve any movement, explains Wandelt.

“In the past, algorithms that tried to predict internal speech have only been able to predict three or four words and with low accuracy or not in real time,” Wandelt says.

The new research is the most accurate yet at predicting internal words. In this case, brain signals were recorded from single neurons in a brain area called the supramarginal gyrus, located in the posterior parietal cortex. The researchers had found in a previous study that this brain area represents spoken words.

Now, the team has extended its findings to internal speech. In the study, the researchers first trained the BMI device to recognize the brain patterns produced when certain words were spoken internally, or thought, by the tetraplegic participant. This training period took about 15 minutes.

They then flashed a word on a screen and asked the participant to say the word internally. The results showed that the BMI algorithms were able to predict eight words with an accuracy of up to 91 percent.

The work is still preliminary but could help patients with brain injuries, paralysis, or diseases such as amyotrophic lateral sclerosis (ALS) that affect speech.

“Neurological disorders can lead to complete paralysis of voluntary muscles, resulting in patients being unable to speak or move, but they are still able to think and reason. For that population, an internal speech BMI would be incredibly helpful,” Wandelt says.

“We have previously shown that we can decode imagined hand shapes for grasping from the human supramarginal gyrus,” says Andersen. “Being able to also decode speech from this area suggests that one implant can recover two important human abilities: grasping and speech.”

The researchers also point out that the BMIs cannot be used to read people’s minds; the device would need to be trained on each person’s brain separately, and it only works when a person focuses on the word.

Other Caltech study authors besides Wandelt and Andersen include David Bjanes, Kelsie Pejsa, Brian Lee, and Charles Liu. Lee and Liu are Caltech visiting associates who are on the faculty of the Keck School of Medicine at USC.

About this neurotech research news

Author: Whitney Clavin

Source: CalTech

Contact: Whitney Clavin – CalTech

Image: The image is in the public domain

Original Research: Closed access.

“Online internal speech decoding from single neurons in a human participant” by Sarah Wandelt et al. MedRxiv

Abstract

Online internal speech decoding from single neurons in a human participant

Speech brain-machine interfaces (BMIs) translate brain signals into words or audio outputs, enabling communication for people who have lost their speech abilities due to disease or injury.

While important advances in vocalized, attempted, and mimed speech decoding have been achieved, results for internal speech decoding are sparse, and have yet to achieve high functionality. Notably, it is still unclear from which brain areas internal speech can be decoded.

In this work, a tetraplegic participant with implanted microelectrode arrays located in the supramarginal gyrus (SMG) and primary somatosensory cortex (S1) performed internal and vocalized speech of six words and two pseudowords.

We found robust internal speech decoding from SMG single neuron activity, achieving up to 91% classification accuracy during an online task (chance level 12.5%).

Evidence of shared neural representations between internal speech, word reading, and vocalized speech processes was found. SMG represented words in different languages (English/Spanish) as well as pseudowords, providing evidence for phonetic encoding.

Furthermore, our decoder achieved high classification with multiple internal speech strategies (auditory imagination/visual imagination). Activity in S1 was modulated by vocalized but not internal speech, suggesting no articulator movements of the vocal tract occurred during internal speech production.

This work represents the first proof-of-concept for a high-performance internal speech BMI.
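
The 12.5% chance level quoted in the abstract follows from the size of the vocabulary: with six words and two pseudowords, a decoder guessing uniformly at random would be correct one time in eight. The short sketch below works through that arithmetic and compares it with the reported peak accuracy. It assumes all eight items are equally likely, and the function name `chance_level` is illustrative only, not taken from the study.

```python
def chance_level(n_classes: int) -> float:
    """Expected accuracy of uniform random guessing over equally likely classes."""
    if n_classes < 1:
        raise ValueError("n_classes must be at least 1")
    return 1.0 / n_classes


# Six words plus two pseudowords, as described in the abstract.
n_items = 6 + 2
baseline = chance_level(n_items)

# Peak online accuracy reported in the abstract.
reported_peak = 0.91
ratio = reported_peak / baseline

print(f"chance level: {baseline:.1%}")
print(f"peak accuracy relative to chance: {ratio:.2f}x")
```

Under this assumption, the reported peak accuracy is roughly seven times what random guessing would produce.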