Summary: Researchers have created a groundbreaking brain-computer interface (BCI) that allows a paralyzed woman to speak through a digital avatar. This development marks the first time that speech or facial expressions have been synthesized directly from brain signals.

The system can decode these signals into text at an impressive rate of nearly 80 words per minute, surpassing existing technologies. The study represents a significant leap toward restoring full communication for paralyzed individuals.

Key Facts:

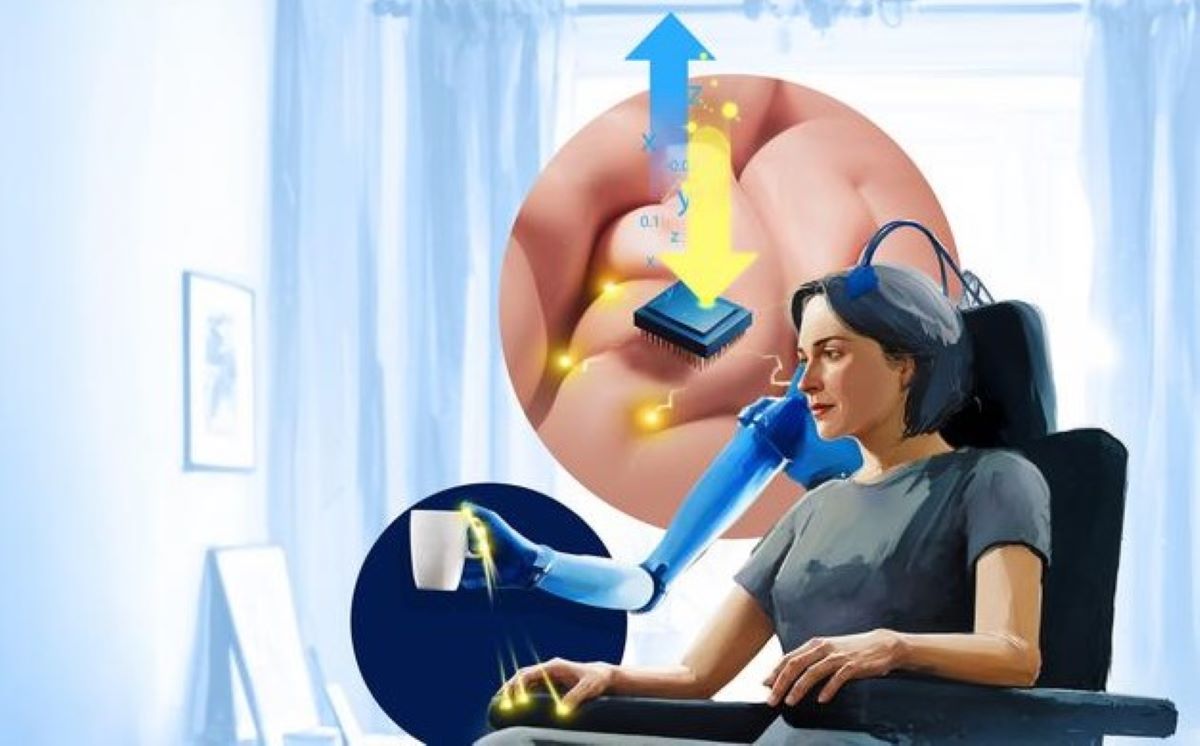

- The BCI decodes brain signals into synthesized speech and facial expressions, enabling paralyzed individuals to communicate more naturally.

- Instead of recognizing whole words, the system identifies phonemes, the sub-units of speech, which improves speed and accuracy.

- The digital avatar’s voice was personalized to match the user’s voice before her injury, and facial animations were driven by software that interpreted the brain’s signals for various facial expressions.

Source: UCSF

Researchers at UC San Francisco and UC Berkeley have developed a brain-computer interface (BCI) that has enabled a woman with severe paralysis from a brainstem stroke to speak through a digital avatar.

It is the first time that either speech or facial expressions have been synthesized from brain signals. The system can also decode these signals into text at nearly 80 words per minute, a vast improvement over commercially available technology.

Edward Chang, MD, chair of neurological surgery at UCSF, who has worked on the technology, known as a brain-computer interface, or BCI, for more than a decade, hopes this latest research breakthrough, appearing Aug. 23, 2023, in Nature, will lead to an FDA-approved system that enables speech from brain signals in the near future.

“Our goal is to restore a full, embodied way of communicating, which is really the most natural way for us to talk with others,” said Chang, who is a member of the UCSF Weill Institute for Neurosciences and the Jeanne Robertson Distinguished Professor in Psychiatry.

“These advancements bring us much closer to making this a real solution for patients.”

Chang’s team previously demonstrated that it was possible to decode brain signals into text in a man who had also experienced a brainstem stroke many years earlier. The current study demonstrates something more ambitious: decoding brain signals into the richness of speech, along with the movements that animate a person’s face during conversation.

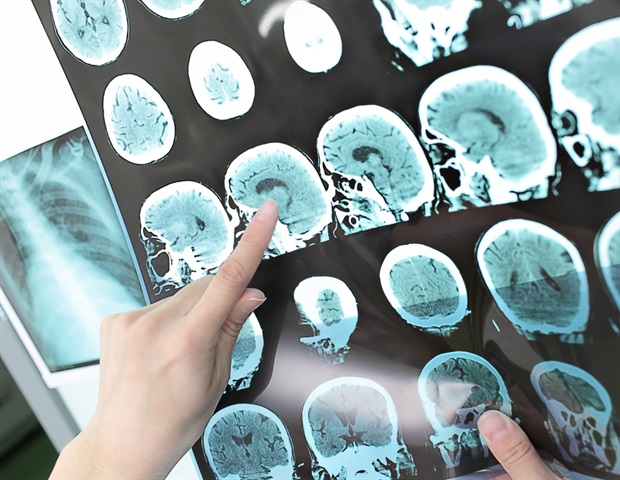

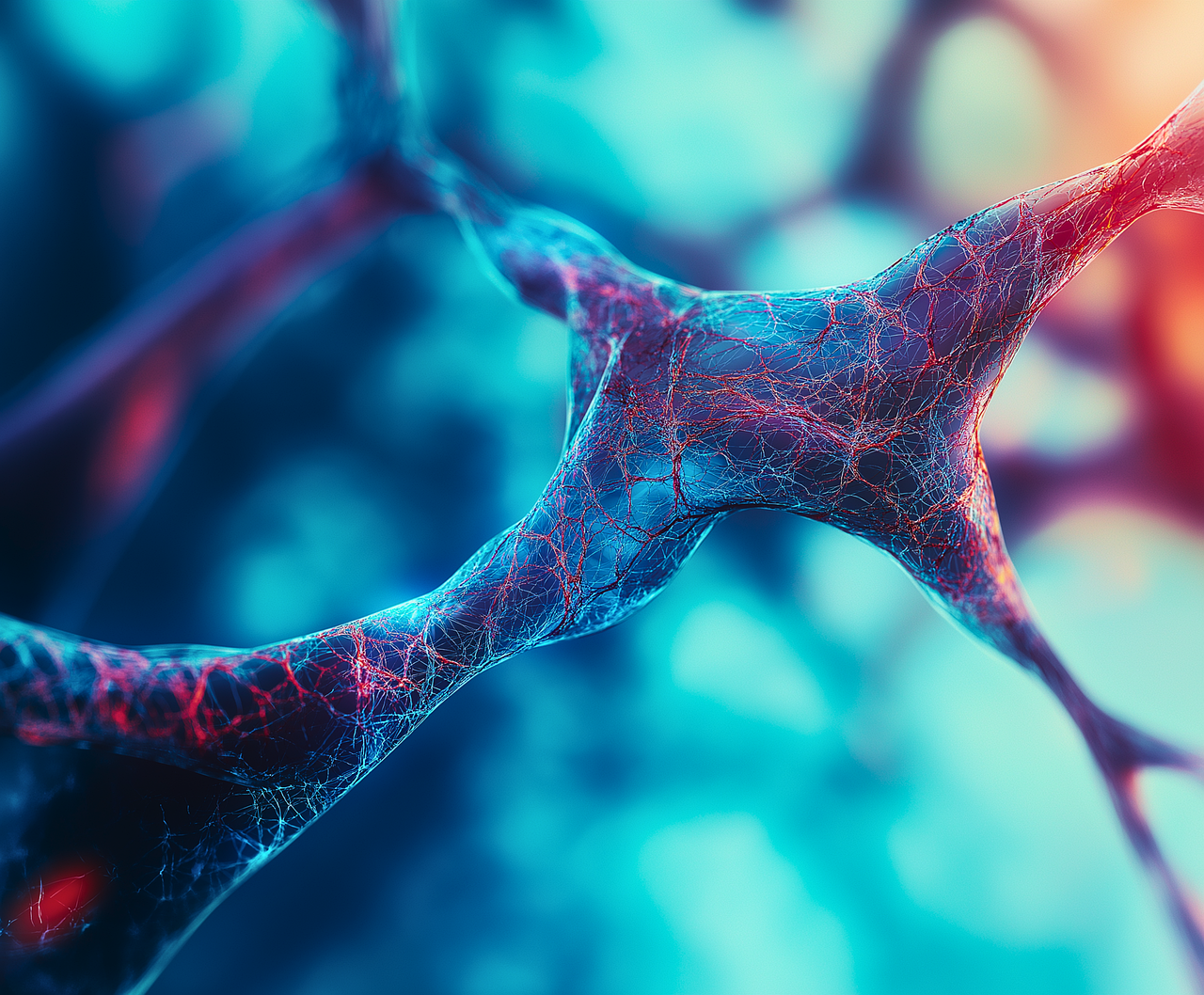

Chang implanted a paper-thin rectangle of 253 electrodes onto the surface of the woman’s brain over areas his team has discovered are critical for speech. The electrodes intercepted the brain signals that, if not for the stroke, would have gone to muscles in her tongue, jaw and larynx, as well as her face. A cable, plugged into a port fixed to her head, connected the electrodes to a bank of computers.

For weeks, the participant worked with the team to train the system’s artificial intelligence algorithms to recognize her unique brain signals for speech. This involved repeating different phrases from a 1,024-word conversational vocabulary over and over again, until the computer recognized the brain activity patterns associated with the sounds.

Rather than train the AI to recognize whole words, the researchers created a system that decodes words from phonemes. These are the sub-units of speech that form spoken words in the same way that letters form written words. “Hello,” for example, contains four phonemes: “HH,” “AH,” “L” and “OW.”

Using this approach, the computer only needed to learn 39 phonemes to decipher any word in English. This both improved the system’s accuracy and made it three times faster.
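To make the phoneme idea concrete, here is a minimal Python sketch of only the final step: turning a stream of already-decoded phonemes back into words with a pronunciation lexicon. It assumes the 39-symbol ARPAbet set used by the CMU Pronouncing Dictionary, which matches the count above. The tiny lexicon, the `phonemes_to_words` function and its fewest-words rule are hypothetical illustrations, not the study’s decoder, which learned to recognize phonemes from brain activity with trained AI models.

```python
from functools import lru_cache

# The 39 ARPAbet phonemes of the CMU Pronouncing Dictionary (stress marks dropped).
# Assumption: the study's inventory is comparable; the article only gives the count.
ARPABET_39 = frozenset(
    "AA AE AH AO AW AY B CH D DH EH ER EY F G HH IH IY JH K L M N NG "
    "OW OY P R S SH T TH UH UW V W Y Z ZH".split()
)

# Hypothetical mini-lexicon; the real system used a 1,024-word vocabulary.
LEXICON = {
    "hello": ("HH", "AH", "L", "OW"),
    "how": ("HH", "AW"),
    "are": ("AA", "R"),
    "you": ("Y", "UW"),
    "low": ("L", "OW"),
}

# Reverse index from pronunciation to word, plus the longest pronunciation length.
PRON_TO_WORD = {pron: word for word, pron in LEXICON.items()}
MAX_PRON_LEN = max(len(pron) for pron in PRON_TO_WORD)


def phonemes_to_words(phonemes):
    """Segment a phoneme sequence into lexicon words, preferring fewer words.

    Returns a list of words, or None if no segmentation covers the whole input.
    """
    seq = tuple(phonemes)
    unknown = [p for p in seq if p not in ARPABET_39]
    if unknown:
        raise ValueError(f"not ARPAbet phonemes: {unknown}")

    @lru_cache(maxsize=None)
    def best(i):
        # Best segmentation of seq[i:] as a tuple of words, or None if impossible.
        if i == len(seq):
            return ()
        options = []
        for j in range(i + 1, min(i + MAX_PRON_LEN, len(seq)) + 1):
            word = PRON_TO_WORD.get(seq[i:j])
            rest = best(j) if word is not None else None
            if rest is not None:
                options.append((word,) + rest)
        return min(options, key=len) if options else None

    result = best(0)
    return None if result is None else list(result)


print(phonemes_to_words(["HH", "AH", "L", "OW", "HH", "AW", "AA", "R", "Y", "UW"]))
# ['hello', 'how', 'are', 'you']
```

Because every word is spelled from the same small symbol set, adding a word only means adding a lexicon entry, which is one intuition for why a phoneme-based decoder scales to a large vocabulary.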

“The accuracy, speed and vocabulary are crucial,” said Sean Metzger, who developed the text decoder with Alex Silva, both graduate students in the joint Bioengineering Program at UC Berkeley and UCSF. “It’s what gives a user the potential, in time, to communicate almost as fast as we do, and to have much more naturalistic and normal conversations.”

To create the voice, the team devised an algorithm for synthesizing speech, which they personalized to sound like her voice before the injury, using a recording of her speaking at her wedding.

The team animated the avatar with the help of software that simulates and animates muscle movements of the face, developed by Speech Graphics, a company that makes AI-driven facial animation.

The researchers created customized machine-learning processes that allowed the company’s software to mesh with signals being sent from the woman’s brain as she was trying to speak and convert them into movements of the avatar’s face, making the jaw open and close, the lips protrude and purse and the tongue go up and down, as well as the facial movements for happiness, sadness and surprise.

“We’re making up for the connections between the brain and vocal tract that have been severed by the stroke,” said Kaylo Littlejohn, a graduate student working with Chang and Gopala Anumanchipalli, PhD, a professor of electrical engineering and computer sciences at UC Berkeley.

“When the subject first used this system to speak and move the avatar’s face in tandem, I knew that this was going to be something that would have a real impact.”

An important next step for the team is to create a wireless version that would not require the user to be physically connected to the BCI.

“Giving people the ability to freely control their own computers and phones with this technology would have profound effects on their independence and social interactions,” said co-first author David Moses, PhD, an adjunct professor in neurological surgery.

Authors: Additional authors include Ran Wang, Maximilian Dougherty, Jessie Liu, Adelyn Tu-Chan, and Karunesh Ganguly of UCSF, Peter Wu and Inga Zhuravleva of UC Berkeley, and Michael Berger of Speech Graphics.

Funding: This research was supported by the National Institutes of Health (NINDS 5U01DC018671, T32GM007618), the National Science Foundation, and philanthropy.

About this AI and neurotech research news

Author: Robin Marks

Source: UCSF

Contact: Robin Marks – UCSF

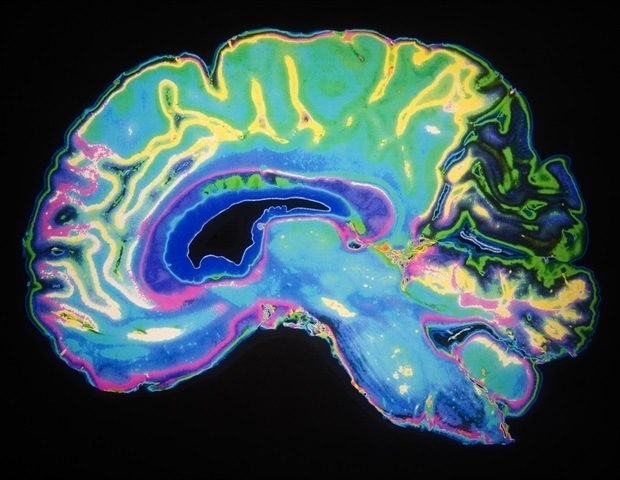

Image: The image is credited to Neuroscience News

Original Research: Open access.

“An analog-AI chip for energy-efficient speech recognition and transcription” by Edward Chang et al. Nature

Abstract

An analog-AI chip for energy-efficient speech recognition and transcription

Models of artificial intelligence (AI) that have billions of parameters can achieve high accuracy across a range of tasks, but they exacerbate the poor energy efficiency of conventional general-purpose processors, such as graphics processing units or central processing units.

Analog in-memory computing (analog-AI) can provide better energy efficiency by performing matrix–vector multiplications in parallel on ‘memory tiles’.

However, analog-AI has yet to demonstrate software-equivalent (SWeq) accuracy on models that require many such tiles and efficient communication of neural-network activations between the tiles.
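The tile idea can be illustrated with a minimal pure-Python sketch that assumes nothing about the chip beyond what the abstract says: a weight matrix is cut into blocks, each block (a stand-in for one memory tile) computes a partial matrix–vector product, and partial sums that belong to the same outputs are added together, which is where communication between tiles comes in. The function name `tiled_matvec` and the tile sizes are hypothetical, and the exact, sequential arithmetic here ignores the analog computation and device noise of real hardware.

```python
def tiled_matvec(weights, x, tile_rows, tile_cols):
    """Compute y = W x by splitting W into tile_rows x tile_cols blocks.

    Each block plays the role of one memory tile: it multiplies its slice of x
    by the weights it stores and emits partial sums for its rows. Tiles that
    share output rows must pass those partial sums along to be added up, which
    stands in for inter-tile communication. Hardware would run the tiles in
    parallel; this loop visits them one after another.
    """
    n_out, n_in = len(weights), len(x)
    if any(len(row) != n_in for row in weights):
        raise ValueError("every weight row must match len(x)")
    y = [0.0] * n_out
    for r0 in range(0, n_out, tile_rows):      # first output row of this tile
        for c0 in range(0, n_in, tile_cols):   # first input column of this tile
            rows = range(r0, min(r0 + tile_rows, n_out))
            cols = range(c0, min(c0 + tile_cols, n_in))
            # Work done "inside" one tile: a small matrix-vector product.
            partial = [sum(weights[r][c] * x[c] for c in cols) for r in rows]
            # Accumulate the tile's partial sums into the shared output vector.
            for r, p in zip(rows, partial):
                y[r] += p
    return y


W = [[1, 2, 3],
     [4, 5, 6]]
print(tiled_matvec(W, [1, 1, 1], tile_rows=1, tile_cols=2))  # [6.0, 15.0]
```

The result is identical to an untiled product; tiling only changes where the multiplications happen and how many partial sums must be moved between tiles.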

Here we present an analog-AI chip that combines 35 million phase-change memory devices across 34 tiles, massively parallel inter-tile communication and analog, low-power peripheral circuitry that can achieve up to 12.4 tera-operations per second per watt (TOPS/W) chip-sustained performance.
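One way to read the efficiency figure: a watt is one joule per second, so TOPS/W reduces to tera-operations per joule, and its reciprocal is the energy spent per operation. The conversion below is back-of-the-envelope arithmetic rather than a value reported in the abstract, and it assumes an "operation" is counted the same way the chip's TOPS figure counts it.

$$
12.4\ \frac{\text{TOPS}}{\text{W}} = 12.4 \times 10^{12}\ \frac{\text{operations}}{\text{J}}
\quad\Longrightarrow\quad
E_{\text{op}} = \frac{1}{12.4 \times 10^{12}}\ \text{J} \approx 8.1 \times 10^{-14}\ \text{J} \approx 81\ \text{fJ}
$$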

We demonstrate fully end-to-end SWeq accuracy for a small keyword-spotting network and near-SWeq accuracy on the much larger MLPerf recurrent neural-network transducer (RNNT), with more than 45 million weights mapped onto more than 140 million phase-change memory devices across five chips.